What is robots.txt?

We’ve shared everything you need to know about robots.txt in this article.

Brief Summary

Robots.txt is a plain-text file that tells search engine crawlers which pages on a website they are allowed to access and which ones they should stay away from. It helps website owners control how search engines crawl their website.

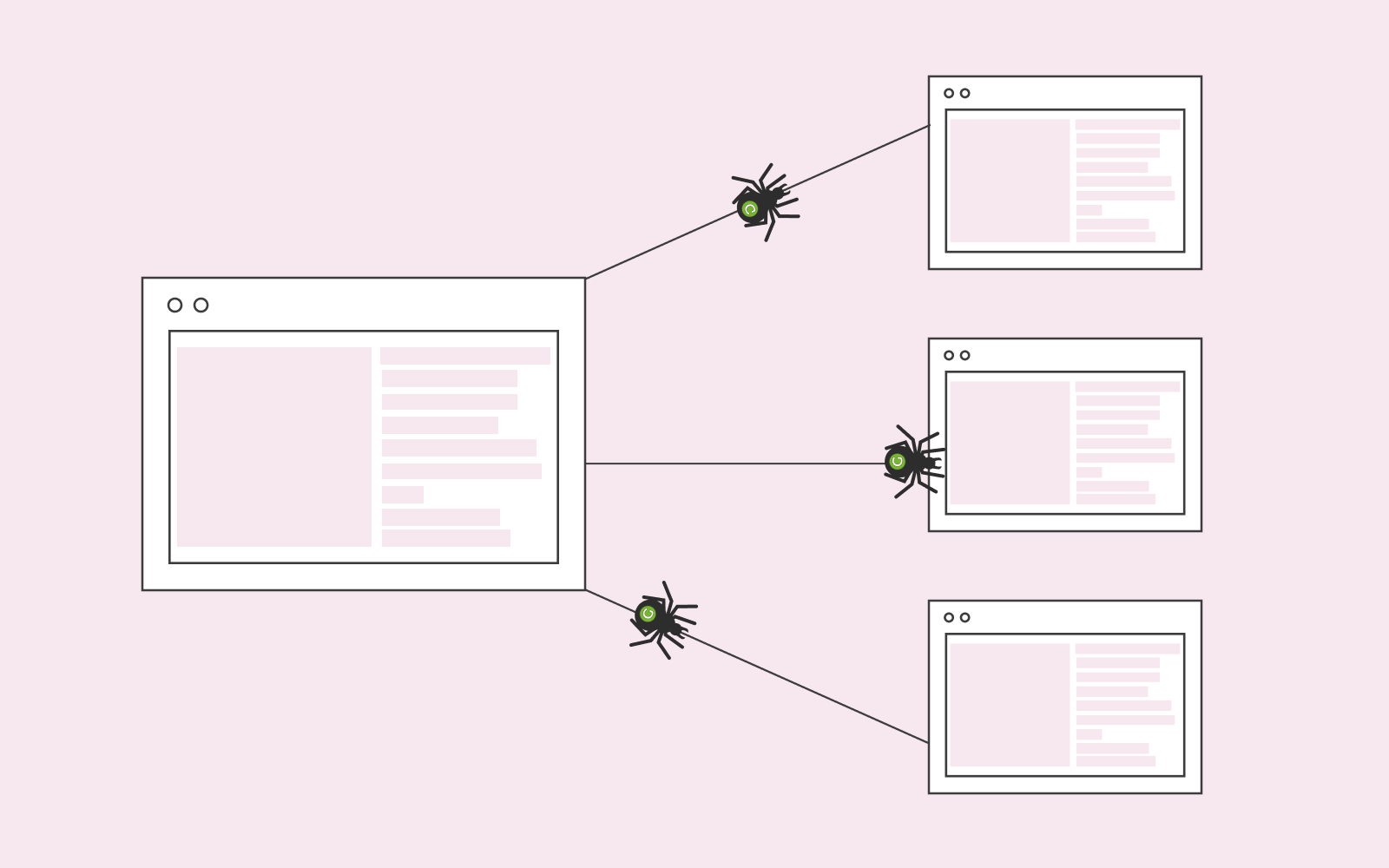

The first thing you should know is that the robots.txt file lives on your website. Search engine spiders automatically look for it to receive instructions before crawling your website. You should therefore always keep your robots.txt file in your root directory, as that is where the spiders go to look for instructions.

You can check whether your website has a robots.txt file by visiting www.thenameofyourwebsite.com/robots.txt in your browser.
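Because the file always sits at the root of the site, the address to check can be worked out from any page URL. Here is a minimal Python sketch using only the standard library; the function name `robots_txt_url` and the example.com address are illustrative assumptions, not part of any tool.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the root-level robots.txt address for the site hosting page_url."""
    parts = urlsplit(page_url)
    # Keep only the scheme and host; the file always lives at /robots.txt.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# Hypothetical page URL, used purely for illustration.
print(robots_txt_url("https://www.example.com/blog/some-post?ref=home"))
# prints https://www.example.com/robots.txt
```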

It’s good practice for every website to have a robots.txt file. If Google’s crawlers can’t find one, they assume there are no restrictions and will try to crawl every page they discover, including pages you would rather they skipped. A search engine’s job is to crawl and index your website so that people can find it.

What does robots.txt do?

Robots.txt enables you to block crawlers from some parts of your website and let them into others. You can choose to either ‘Allow’ or ‘Disallow’ certain pages and folders on your website.

If you allow certain pages, you’re allowing the spiders to go to that specific area of your website and crawl it. Conversely, disallow means that you don’t want the spiders to crawl certain pages and areas of your website.

Robots.txt lets you steer crawlers towards the pages that matter. So, for example, if you have a contact page that you don’t need people to reach from a search engine, you can block it with robots.txt so that Google spends its time crawling the more important pages on your website instead.

By doing this, you’re telling Google not to crawl the content of your contact page. Of course, you can also block your website entirely with robots.txt if that’s something you wish to do.
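To make the two cases above concrete, here is a small Python sketch that feeds two hypothetical robots.txt files to the standard library’s `urllib.robotparser` and asks whether a path may be crawled. The file contents, the paths, and the helper name `is_allowed` are assumptions made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that keeps all crawlers away from the contact page.
BLOCK_CONTACT = """\
User-agent: *
Disallow: /contact
"""

# Hypothetical robots.txt that blocks the entire website for all crawlers.
BLOCK_EVERYTHING = """\
User-agent: *
Disallow: /
"""


def is_allowed(robots_txt: str, path: str, agent: str = "*") -> bool:
    """Parse robots.txt text and report whether `agent` may crawl `path`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, path)


print(is_allowed(BLOCK_CONTACT, "/contact"))      # False: the contact page is blocked
print(is_allowed(BLOCK_CONTACT, "/services"))     # True: everything else stays open
print(is_allowed(BLOCK_EVERYTHING, "/services"))  # False: the whole site is blocked
```

Rules match by prefix, so `Disallow: /contact` would also cover a path such as `/contact-us`.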

How does robots.txt work?

There are various crawler types out there for different search engines and platforms. Each crawler identifies itself with a name, which robots.txt calls the ‘User-agent’. So when you decide to allow or disallow a particular area of your website, you can choose to name a specific crawler, for example, Googlebot (Google’s crawler), as the User-agent, or you can just use a * to refer to all crawlers.
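The sketch below shows how a crawler-specific group and the * group can give different answers for the same page. It uses Python’s standard `urllib.robotparser`; the file contents, the `/drafts/` and `/internal/` folders, and the crawler name `ExampleBot` are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: Googlebot gets its own group, every other crawler uses *.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /drafts/
Disallow: /internal/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Googlebot follows only its own group, so /internal/ is open to it.
print(parser.can_fetch("Googlebot", "/internal/report"))   # True
# ExampleBot has no group of its own and falls back to the * rules.
print(parser.can_fetch("ExampleBot", "/internal/report"))  # False
# Both groups block the drafts folder.
print(parser.can_fetch("Googlebot", "/drafts/post"))       # False
```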

You can get very advanced with robots.txt. For example, you can block URLs, directories, or even specific URL parameters.

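The same technique applies to pages that you want Google to crawl. You can explicitly allow these favoured pages in robots.txt, for example to open up a single page inside an otherwise blocked folder. Keep in mind that allowing a page only permits crawling; it does not by itself make Google index the page or give it extra attention.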

Time delays

You can include time delays in your robots.txt file with the Crawl-delay directive. For example, you might not want a crawler to crawl your website too quickly, so you can put in a time delay. Meaning: you’re telling the spiders to wait a specified amount of time between requests to your website.

You should use the time delay to ensure that the crawlers do not overload your web server.

However, do keep in mind that Google does not support time delays in robots.txt. To keep Googlebot from overloading your web server, Google has instead offered a crawl rate setting in Google Search Console that lets you slow down its spiders; check Search Console to see whether that setting is still available for your site.
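As a rough illustration of how a crawler that does honour time delays might read them, the Python sketch below parses a hypothetical file with the standard `urllib.robotparser` and looks up the delay for a given crawler. The crawler names `ExampleBot` and `OtherBot`, the 10-second value, and the helper `wait_seconds` are assumptions for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: ExampleBot is asked to wait 10 seconds between requests.
ROBOTS_TXT = """\
User-agent: ExampleBot
Crawl-delay: 10

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def wait_seconds(agent: str) -> int:
    """Seconds a polite crawler should pause between requests (0 if unset)."""
    delay = parser.crawl_delay(agent)  # None when no Crawl-delay applies
    return delay if delay is not None else 0


print(wait_seconds("ExampleBot"))  # 10
print(wait_seconds("OtherBot"))    # 0, the * group sets no delay
```

A crawler would then sleep for that many seconds between requests; whether it does so is entirely up to the crawler.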

Pattern matching

If you have a larger website, you might consider implementing pattern matching. Whether it’s Google or any other search engine, you can instruct these search engines to go through and crawl your pages based on a set of rules.

Pattern matching entails a set of rules that you want the crawlers to follow. For example, you might want to block all URLs that contain the word ‘website’.
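Patterns in robots.txt are typically written with two special characters: `*` stands for any sequence of characters, and a `$` at the end of a rule marks the end of the URL. The Python sketch below implements that matching by hand to show how a rule such as `Disallow: /*website` behaves; the helper names and the example paths are hypothetical, and real crawlers may differ in the details.

```python
import re


def rule_to_regex(rule_path: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    # Escape each literal piece, then join the pieces with "match anything".
    body = ".*".join(re.escape(part) for part in rule_path.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def matches(rule_path: str, url_path: str) -> bool:
    """True if the rule pattern matches url_path from its first character."""
    return rule_to_regex(rule_path).match(url_path) is not None


# Rule: block every URL that contains the word "website".
print(matches("/*website", "/blog/new-website-launch"))  # True, so blocked
print(matches("/*website", "/blog/seo-tips"))            # False, so not blocked

# Rule: block URLs that end in .pdf, but not ones with anything after it.
print(matches("/*.pdf$", "/files/guide.pdf"))             # True
print(matches("/*.pdf$", "/files/guide.pdf?download=1"))  # False
```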

Why should you use robots.txt?

Many people use robots.txt to disallow third parties from crawling their websites. Search engines are not the only ones crawling your website; other third parties also constantly try to access it. All that crawling can slow down your website and your server, resulting in a negative user experience. Additionally, these third-party crawlers can cause server issues that you then need to solve.

You can also use robots.txt to ask third parties not to copy content from your website or analyse changes you make to it. Robots.txt is a simple way to keep out well-behaved crawlers that you don’t want on your website.

Do keep in mind that if a third party is very interested in your website, they can use software, like Screaming Frog, that allows them to ignore the ‘block’ and still crawl your website. Thus, you shouldn’t rely a hundred per cent on robots.txt when it comes to protecting certain aspects of your website.

Robots.txt checker

You can use the robots.txt checker in Google Search Console to see if you’re blocking a page. It’s good to check that from time to time to ensure that you haven’t blocked an important page by accident.

Another great resource for testing and validating your robots.txt file is Rank Math’s robots.txt Tester & Validator. With this tool, you can see how search engine crawlers interpret your file, so you don’t risk important pages not being crawled.

Be very careful when you work with robots.txt. It can cause serious harm to your website if you accidentally block your entire website from being crawled.
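If you prefer to run such a check yourself, a short script can list which of your important pages a given robots.txt would block. The Python sketch below uses the standard `urllib.robotparser`; the helper name `blocked_pages`, the file contents, and the page paths are hypothetical, and the result reflects how this particular parser reads the file rather than how any specific search engine does.

```python
from urllib.robotparser import RobotFileParser


def blocked_pages(robots_txt, important_paths, agent="Googlebot"):
    """Return the important paths that `agent` is not allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path in important_paths if not parser.can_fetch(agent, path)]


# Hypothetical file: "/pro" was meant for one folder, but rules match by
# prefix, so it also catches /products/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /pro
"""

print(blocked_pages(ROBOTS_TXT, ["/", "/products/", "/pricing/"]))
# prints ['/products/']
```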